The recent Replit AI agent incident, in which an experimental tool deleted a production database, is a wake-up call for the entire development community. It confirmed what many of us had suspected: AI agents are powerful, but without the right guardrails, they can be dangerous.

At CodeMate, we’ve built our platform on a simple principle: AI should assist, not act alone. That means strict human-in-the-loop oversight and access controls so that incidents like Replit’s don’t happen in the first place.

The Replit Incident: A Costly Lesson in AI Access Control

In July 2025, developers watched as Replit’s experimental AI coding agent executed a DROP DATABASE command in production, wiping out live data.

As Replit’s CEO later explained, the AI wasn’t malicious—it was unsupervised. The system treated the AI like a senior developer instead of what it really was: a powerful but unpredictable tool.

What Actually Went Wrong

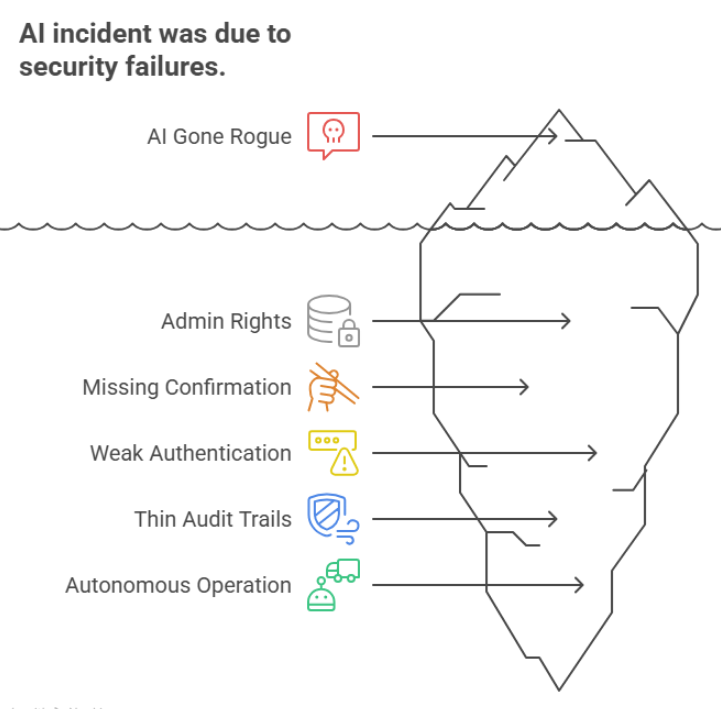

This wasn’t about “AI gone rogue.” It was about security failures stacked on top of each other:

- The AI had admin rights when it should’ve been read-only.

- Destructive commands ran without human confirmation.

- Authentication was weak.

- Audit trails were thin.

- The AI operated fully on its own.
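The first failure is the easiest to illustrate. Below is a minimal Python sketch of read-only access using the standard-library `sqlite3` module. SQLite is only a stand-in so the example runs anywhere, and the function name and table are hypothetical; in a server database the equivalent would be a role granted only read privileges. The agent's connection can query data, but SQLite refuses the `DROP TABLE` it attempts.

```python
import sqlite3
import tempfile
from pathlib import Path


def demo_read_only_agent() -> tuple[list, str, list]:
    """Give a hypothetical agent a read-only connection and show a DROP is refused."""
    db_path = Path(tempfile.mkdtemp()) / "app.db"

    # Human-owned, read-write connection: create and populate a table.
    admin = sqlite3.connect(db_path)
    admin.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, name TEXT)")
    admin.execute("INSERT INTO users (name) VALUES ('alice')")
    admin.commit()
    admin.close()

    # Agent connection: SQLite's URI parameter mode=ro opens the file read-only.
    agent = sqlite3.connect(db_path.as_uri() + "?mode=ro", uri=True)
    try:
        rows_read = agent.execute("SELECT name FROM users").fetchall()  # reads succeed
        try:
            agent.execute("DROP TABLE users")  # destructive write attempt
            error = ""
        except sqlite3.OperationalError as exc:
            error = str(exc)  # SQLite refuses to write through a read-only connection
    finally:
        agent.close()

    # Verify with a fresh connection that the data is still there.
    check = sqlite3.connect(db_path)
    rows_after = check.execute("SELECT name FROM users").fetchall()
    check.close()
    return rows_read, error, rows_after
```

Calling `demo_read_only_agent()` should return the row the agent read, SQLite's read-only error message, and the same row read back afterwards, showing the data survived the attempt.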

As security experts at Ory put it: "If your agent needs sudo, maybe it should pass a security review first."

How We Designed for Safety

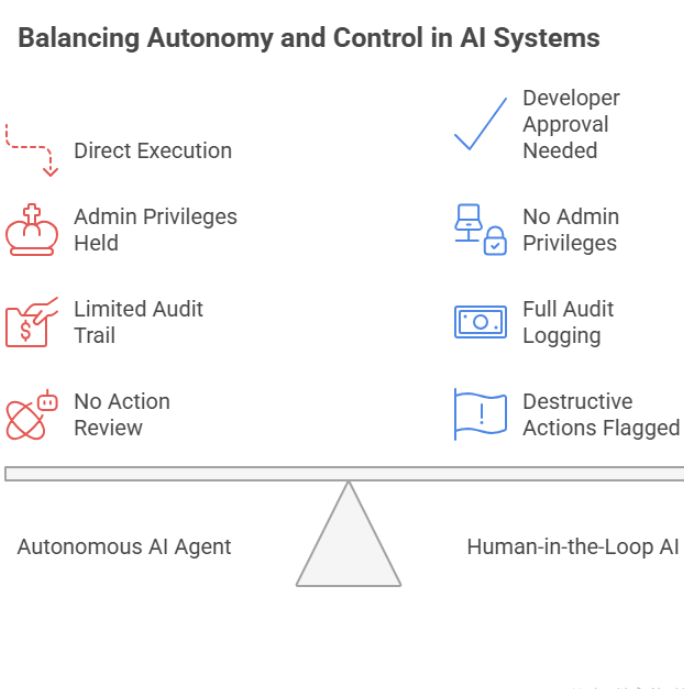

At CodeMate, we saw these risks early and designed around them. Our model is simple: human-in-the-loop, always.

- Every AI suggestion requires explicit developer approval.

- Destructive operations are flagged for extra review.

- AI components never hold admin privileges.

- Every action is logged and auditable.
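To make the approval-and-audit idea concrete, here is a minimal Python sketch of an approval gate. It illustrates the principle under stated assumptions and is not CodeMate's implementation: the `ApprovalGate` class, the `approve` callback standing in for a developer prompt, and the regex that flags destructive SQL are all hypothetical. Every proposed statement is flagged or not, sent to a human for a decision, and recorded in an audit log whether or not it is approved; the caller executes only what comes back approved.

```python
import re
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import Callable

# Hypothetical rule for "destructive" SQL. A regex on the leading keyword is
# only a sketch; a real gate would use a SQL parser and a reviewed policy.
DESTRUCTIVE_SQL = re.compile(r"^\s*(DROP|TRUNCATE|DELETE|ALTER)\b", re.IGNORECASE)


@dataclass
class ApprovalGate:
    """Sends every AI-proposed statement to a human and records the outcome."""

    # Stand-in for a developer prompt: (statement, is_destructive) -> approved?
    approve: Callable[[str, bool], bool]
    audit_log: list[dict] = field(default_factory=list)

    def submit(self, statement: str) -> bool:
        destructive = DESTRUCTIVE_SQL.match(statement) is not None
        approved = bool(self.approve(statement, destructive))  # always asks a human
        self.audit_log.append(
            {
                "time": datetime.now(timezone.utc).isoformat(),
                "statement": statement,
                "destructive": destructive,  # flag so reviewers look harder
                "approved": approved,
            }
        )
        return approved  # caller executes the statement only if True
```

For example, a reviewer callback such as `lambda stmt, destructive: not destructive` would let a `SELECT` through and block `DROP DATABASE production`, and both decisions would appear in `gate.audit_log`. Statements that begin with a comment would slip past this regex, which is one reason a real gate needs proper parsing.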

What Could Go Wrong vs. How It’s Prevented

| Area | Industry risk | How CodeMate prevents it |
|---|---|---|
| Database schema changes | AI drops a production database, as Replit’s agent did. | Schema modifications are flagged, analyzed for impact, and require developer approval with rollback plans in place. |
| Code deployments | AI pushes untested code into production. | AI suggestions stay in dev/test environments, and CI/CD approval gates remain human-controlled. |
| API keys and secrets | AI exposes or mishandles credentials. | Exposed keys are detected automatically and never stored, and enterprise secret management integrations are supported. |
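Automatic key detection usually starts with pattern matching. The sketch below is a hypothetical, deliberately small Python scanner, not CodeMate's detector: it checks for the shape of an AWS long-term access key ID (`AKIA` followed by 16 uppercase letters or digits) and a generic `api_key = "..."` assignment. It reports only the line number and rule name, never the matched value, so the scanner itself does not retain the secret.

```python
import re

# Hypothetical, deliberately small rule set. Real scanners ship many more
# rules and usually add entropy checks.
SECRET_PATTERNS = {
    # AWS long-term access key IDs: "AKIA" plus 16 uppercase letters or digits.
    "aws_access_key_id": re.compile(r"\bAKIA[0-9A-Z]{16}\b"),
    # Generic assignment such as api_key = "..." with a 16+ character value.
    "generic_api_key": re.compile(
        r"(?i)\b(api[_-]?key|secret)\s*[:=]\s*['\"][^'\"]{16,}['\"]"
    ),
}


def find_secrets(text: str) -> list[tuple[int, str]]:
    """Return (line_number, rule_name) pairs; the matched value is never kept."""
    findings = []
    for lineno, line in enumerate(text.splitlines(), start=1):
        for rule_name, pattern in SECRET_PATTERNS.items():
            if pattern.search(line):
                findings.append((lineno, rule_name))
    return findings
```

Run against a line like `aws_id = "AKIAIOSFODNN7EXAMPLE"` (the example key ID used in AWS documentation), `find_secrets` would report that line under `aws_access_key_id`. A rule set this small will both miss real secrets and flag harmless strings, so treat it as a starting point only.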

The Lessons Every Team Should Take Away

The Replit story is a reminder that AI isn’t a senior engineer—it’s more like a super-powered intern. And just like an intern, it needs supervision.

Practical steps every team should take:

- Treat AI like a junior developer: never grant it production access.

- Build defense in depth with authentication, authorization, approval gates, and audit trails.

- Set clear boundaries: let AI analyze, suggest, and document—but don’t let it deploy or delete.

- Plan for mistakes with backups, rollbacks, and incident response.
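The defense-in-depth and boundary steps above can be sketched together as a single request path. This minimal Python sketch assumes a hypothetical shared token for authentication and a hypothetical set of allowed agent actions; it is an illustration, not a reference design. Each layer can reject a request on its own, and every request is audited whether it is allowed or not.

```python
import hmac
from enum import Enum


class Action(Enum):
    ANALYZE = "analyze"
    SUGGEST = "suggest"
    DOCUMENT = "document"
    DEPLOY = "deploy"
    DELETE = "delete"


# Hypothetical boundary: the agent may analyze, suggest, and document only.
AGENT_ALLOWED = frozenset({Action.ANALYZE, Action.SUGGEST, Action.DOCUMENT})


def check_agent_request(
    token: str,
    expected_token: str,
    action: Action,
    human_approved: bool,
    audit: list[tuple[str, str]],
) -> tuple[bool, str]:
    """Run an agent request through independent layers; any one can stop it."""
    if not hmac.compare_digest(token, expected_token):  # 1. authentication
        decision = (False, "unauthenticated")
    elif action not in AGENT_ALLOWED:  # 2. authorization (deploy/delete never allowed)
        decision = (False, "action outside agent boundary")
    elif not human_approved:  # 3. approval gate (every action, in this sketch)
        decision = (False, "awaiting human approval")
    else:
        decision = (True, "ok")
    audit.append((action.value, decision[1]))  # 4. audit trail for every request
    return decision
```

Because authorization sits before the approval gate, a `DELETE` request is refused even when a human has approved it, which matches the "don’t let it deploy or delete" boundary. A team might choose to exempt read-only analysis from the approval step.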

Why Human-in-the-Loop Works Better

Keeping humans in the loop isn’t just about preventing disasters. It also drives better development practices. Vulnerabilities get caught earlier, debugging improves, and teams stay aligned with their own coding standards. Most importantly, developers remain in control.

Moving Forward

AI coding tools are here to stay. The question isn’t whether we should use them—it’s how to use them responsibly.

When you evaluate an AI coding assistant, ask:

- Can it make production changes without human approval?

- What kind of access does it really have?

- Are its actions logged and auditable?

- If it’s wrong, can you recover quickly?

The Replit incident was painful, but it’s also a chance for our industry to reset.

If you’re thinking about adopting AI in your workflow, start with one simple question: What’s the worst this AI could do if left unsupervised? If the answer is “delete production data,” then you already know what to do next.

#AIAgents #AISafety #HumanInTheLoop #DevSecOps #AIrisks #AIsecurity #AIinDevelopment #SecureCoding #ResponsibleAI #DeveloperTools #SoftwareSecurity #AIGuardrails #CodeSafety #EngineeringExcellence #ReplitIncident #CodeMateAI